Data Preparation for Duplicate Detection

Jul 1, 2020

Ioannis Koumarelas, PhD

Lan Jiang

Felix Naumann

Abstract

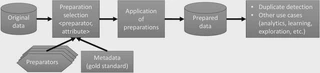

Data errors are a major issue in most application workflows. Before any important task can take place, a certain level of data quality has to be guaranteed by eliminating the various errors that may appear in the data. Typically, most of these errors are fixed with data preparation methods, such as whitespace removal. However, the particular error of duplicate records, where multiple records refer to the same entity, is usually eliminated independently with specialized techniques.
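To make the interplay concrete, here is a deliberately simplified sketch that is not taken from the paper. A single hypothetical preparation, `remove_extra_whitespace`, is applied before exact-match grouping of records on one attribute. Real duplicate detection compares record pairs with similarity measures rather than exact keys. The point is only that a preparation step can turn hidden duplicates into detectable ones.

```python
import re
from collections import defaultdict


def remove_extra_whitespace(value: str) -> str:
    """Hypothetical preparation: collapse internal whitespace runs and trim the ends."""
    return re.sub(r"\s+", " ", value).strip()


def exact_duplicate_groups(records, prepare=lambda v: v):
    """Group record ids whose (prepared) values are identical; singletons are dropped."""
    groups = defaultdict(list)
    for rid, value in records:
        groups[prepare(value)].append(rid)
    return [ids for ids in groups.values() if len(ids) > 1]


# Hypothetical records: ids 1 and 2 describe the same person but differ in whitespace.
records = [(1, "Felix Naumann"), (2, " Felix  Naumann"), (3, "Lan Jiang")]
raw_groups = exact_duplicate_groups(records)
prepared_groups = exact_duplicate_groups(records, remove_extra_whitespace)
print(raw_groups, prepared_groups)  # [] [[1, 2]]
```

Without preparation no duplicate is found. After whitespace removal, records 1 and 2 collapse to the same value.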

Our work is the first to bring these two areas together by systematically applying data preparation operations before performing duplicate detection. As input to our workflow, the user provides a sample of the gold standard, the actual dataset, and, optionally, domain-specific constraints for certain data preparations (e.g., address normalization).

The selection of data preparations works in two phases. First, to drastically reduce the search space, ineffective preparations are discarded based on whether they improve or deteriorate pair similarities. Second, an iterative leave-one-out classification process removes the remaining preparations one by one and identifies redundant ones based on the achieved area under the precision–recall curve (AUC-PR). Using this workflow, we improve duplicate detection results by up to 19% in AUC-PR.
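The two-phase selection can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The gold-standard sample is modeled as a list of labeled value pairs, and `difflib.SequenceMatcher` stands in for the paper's similarity measures. Phase one keeps a preparation only if it widens the gap between the mean similarity of duplicate and non-duplicate pairs, which is an assumed criterion. In phase two, the similarity score itself stands in for a trained classifier's confidence, with AUC-PR estimated by average precision. All function names, preparation names, and data are hypothetical.

```python
import re
from difflib import SequenceMatcher
from typing import Callable, Dict, List, Tuple

Prep = Callable[[str], str]
Pair = Tuple[str, str, bool]  # (value_a, value_b, is_duplicate) from the gold-standard sample


def similarity(a: str, b: str) -> float:
    # Stand-in similarity in [0, 1]; the paper's measures may differ.
    return SequenceMatcher(None, a, b).ratio()


def apply_all(value: str, preps: List[Prep]) -> str:
    for prep in preps:
        value = prep(value)
    return value


def score_pairs(pairs: List[Pair], preps: List[Prep]) -> List[Tuple[float, bool]]:
    return [(similarity(apply_all(a, preps), apply_all(b, preps)), dup) for a, b, dup in pairs]


def average_precision(scored: List[Tuple[float, bool]]) -> float:
    """Average precision, a standard estimator of AUC-PR."""
    ranked = sorted(scored, key=lambda s: s[0], reverse=True)
    total_pos = sum(dup for _, dup in ranked)
    hits, ap = 0, 0.0
    for k, (_, dup) in enumerate(ranked, start=1):
        if dup:
            hits += 1
            ap += hits / k  # precision at each recall step
    return ap / total_pos if total_pos else 0.0


def phase1_filter(pairs: List[Pair], candidates: Dict[str, Prep]) -> Dict[str, Prep]:
    """Assumed criterion: keep a preparation if it widens the mean similarity gap
    between duplicate and non-duplicate pairs (needs both classes in the sample)."""
    def gap(preps: List[Prep]) -> float:
        scored = score_pairs(pairs, preps)
        dup = [s for s, d in scored if d]
        non = [s for s, d in scored if not d]
        return sum(dup) / len(dup) - sum(non) / len(non)

    base = gap([])
    return {name: p for name, p in candidates.items() if gap([p]) > base}


def phase2_leave_one_out(pairs: List[Pair], kept: Dict[str, Prep]) -> Dict[str, Prep]:
    """Drop, one at a time, any preparation whose removal does not lower AUC-PR."""
    selected = dict(kept)
    changed = True
    while changed and selected:
        changed = False
        baseline = average_precision(score_pairs(pairs, list(selected.values())))
        for name in list(selected):
            rest = [p for n, p in selected.items() if n != name]
            if average_precision(score_pairs(pairs, rest)) >= baseline:
                del selected[name]  # redundant: AUC-PR holds without it
                changed = True
                break
    return selected


# Hypothetical candidate preparations and a tiny hypothetical gold-standard sample.
candidates: Dict[str, Prep] = {
    "collapse_whitespace": lambda v: re.sub(r"\s+", " ", v).strip(),
    "lowercase": str.lower,
    "drop_digits": lambda v: re.sub(r"\d", "", v),
}
gold = [
    ("Felix  Naumann", "felix naumann", True),
    ("Lan Jiang ", "lan jiang", True),
    ("Main St 12", "Main St 21", False),
    ("Ioannis K.", "Jan K.", False),
]
kept = phase1_filter(gold, candidates)
selected = phase2_leave_one_out(gold, kept)
print(list(kept), list(selected))
```

On this toy sample, phase one discards `drop_digits` because it makes two different addresses identical. Phase two then finds `collapse_whitespace` redundant, since lowercasing alone already ranks both duplicate pairs above the non-duplicates. Note that the order of preparations matters when they are chained. This sketch simply applies them in dictionary order.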

Type

Publication

In ACM Journal of Data and Information Quality, 2020